k1lib.cli module

Setup

To install the library, run this in a terminal:

pip install k1lib[all]

If you don’t want to install extra dependencies (not recommended), you can do this instead:

pip install k1lib

To use it in a python file or a notebook, do this:

from k1lib.imports import *

Because there are a lot of functions with common names, you may have custom functions or

classes that have the same name, which will override the functions in the library. If you

want to use them, you can use cli.sort() instead of sort() for example.

Intro

The main idea of this package is to emulate the terminal (hence “cli”, or “command line interface”), but doing all of that inside Python itself. So this bash statement:

cat file.txt | head -5 > headerFile.txt

Turns into this statement:

cat("file.txt") | head(5) > file("headerFile.txt")

Let’s step back a little bit. In the bash statement, “cat” and “head” are actual programs

accessible through the terminal, and “|” will pipe the output of 1 program into another

program. cat file.txt will read a file and returns a list of all rows in it, which

will then be piped into head -5, which will only return the first 5 lines. Finally,

> headerFile.txt will redirect the output to the “headerFile.txt” file. See this video

for more: https://www.youtube.com/watch?v=bKzonnwoR2I

On the Python side, “cat”, “head” and “file” are Python classes extended from BaseCli.

cat("file.txt") will read the file line by line, and return a list of all of them. head(5)

will take in that list and return a list with only the first 5 lines. Finally, > file("headerFile.txt")

will take that in and writes it to a file.

You can even integrate with existing shell commands:

ls("~") | cmd("grep *.so")

Here, “ls” will list out files inside the home directory, then pipes it into regular grep on linux, which is then piped back into Python as a list of strings. So it’s equivalent to this bash statement:

ls | grep *.so

Let’s see a really basic example:

# just a normal function

f = lambda x: x**2

# returns 9, no surprises here

f(3)

# f is now a cli tool

f = aS(lambda x: x**2)

# returns 9, demonstrating that they act like normal functions

f(3)

# returns 9, demonstrating that you can also pipe into them

3 | f

Here, aS is pretty much the simplest cli available. It just makes whatever

function you give it pipe-able, as you can’t quite pipe things to lambda functions in vanilla Python.

You can think of the flow of these clis in terms of 2 phases. 1 is configuring what you want the cli to do, and 2 is actually executing it. Let’s say you want to take a list of numbers and take the square of them:

# configuration stage. You provide a function to `apply` to tell it what function to apply to each element in the list, kinda like Python's "map" function

f = apply(lambda x: x**2)

# initialize the input

x = range(5)

# execution stage, normal style, returns [0, 1, 4, 9, 16]

list(f(x))

# execution stage, pipe style, returns [0, 1, 4, 9, 16]

list(x | f)

# typical usage: combining configuration stage and execution stage, returns [0, 1, 4, 9, 16]

list(range(5) | apply(lambda x: x**2))

# refactor converting to list so that it uses pipes, returns [0, 1, 4, 9, 16]

range(5) | apply(lambda x: x**2) | aS(list)

You may wonder why do we have to turn it into a list. That’s because all cli tools execute things lazily, so they will return iterators, instead of lists. Here’s how iterators work:

def gen(): # this is a generator, a special type of iterator. It generates elements

yield 3

print("after yielding 3")

yield 2

yield 5

for e in gen():

print("in for loop:", e)

It will print this out:

in for loop: 3

after yielding 3

in for loop: 2

in for loop: 5

So, iterators feels like lists. In fact, a list is an iterator, range(5), numpy arrays

and strings are also iterators. Basically anything that you can iterate through is an

iterator. The above iterator is a little special, as it’s specifically called a “generator”.

They are actually a really cool aspect of Python, in terms of they execute code lazily, meaning

gen() won’t run all the way when you call it. In fact, it doesn’t run at all. Only once you

request new elements when trying to iterate over it will the function run.

All cli tools utilize this fact, in terms of they will not actually execute anything unless you force them to:

# returns "<generator object apply.__ror__.<locals>.<genexpr> at 0x7f7ae48e4d60>"

range(5) | apply(lambda x: x**2)

# you can iterate through it directly:

for element in range(5) | apply(lambda x: x**2):

print(element)

# returns [0, 1, 4, 9, 16], in case you want it in a list

list(range(5) | apply(lambda x: x**2))

# returns [0, 1, 4, 9, 16], demonstrating deref

range(5) | apply(lambda x: x**2) | deref()

In the first line, it returns a generator, instead of a normal list, as nothing has actually been

executed. You can still iterate through generators using for loops as usual, or you can convert it

into a list. When you get more advanced, and have iterators nested within iterators within iterators,

you can use deref to turn all of them into lists.

Also, a lot of these tools (like apply and filt)

sometimes assume that we are operating on a table. So this table:

col1 |

col2 |

col3 |

|---|---|---|

1 |

2 |

3 |

4 |

5 |

6 |

Is equivalent to this list:

[["col1", "col2", "col3"], [1, 2, 3], [4, 5, 6]]

Warning

If you’re not an advanced user, just skip this warning.

All cli tools should work fine with torch.Tensor, numpy.ndarray and pandas.core.series.Series,

but k1lib actually modifies Numpy arrays and Pandas series deep down for it to work.

This means that you can still do normal bitwise or with a numpy float value, and

they work fine in all regression tests that I have, but you might encounter strange bugs.

You can disable it manually by changing settings.startup.or_patch like this:

import k1lib

k1lib.settings.startup.or_patch.numpy = False

from k1lib.imports import *

If you choose to do this, you’ll have to be careful and use these workarounds:

torch.randn(2, 3, 5) | shape() # returns (2, 3, 5), works fine

np.random.randn(2, 3, 5) | shape() # will not work, returns weird numpy array of shape (2, 3, 5)

shape()(np.random.randn(2, 3, 5)) # returns (2, 3, 5), mitigation strategy #1

[np.random.randn(2, 3, 5)] | (item() | shape()) # returns (2, 3, 5), mitigation strategy #2

Again, please note that you only need to do these workarounds if you choose to turn off C-type modifications. If you keep things by default, then all examples above should work just fine.

All cli-related settings are at settings.cli.

Argument expansion

I’d like to quickly mention the argument expansion motif that’s prominent in some cli tools. Check out this example:

[3, 5] | aS(lambda a: a[0] + a[1]) # returns 8, long version, not descriptive elements ("a[0]" and "a[1]")

[3, 5] | ~aS(lambda x, y: x + y) # returns 8, short version, descriptive elements ("x" and "y")

[[3, 5], [2, 7]] | apply(lambda a: a[0] + a[1]) | aS(list) # returns [8, 9], long version

[[3, 5], [2, 7]] | ~apply(lambda x, y: x + y) | aS(list) # returns [8, 9], short version

Here, the tilde operator (“~”, officially called “invert” in Python) used on aS and

apply means that the input object/iterator will be expanded so that it fills all

available arguments. This is a small quality-of-life feature, but makes a big difference, as parameters

can now be named separately and nicely (“x” and “y”, which can convey that this is a coordinate of some

sort, instead of “a[0]” and “a[1]”, which conveys nothing).

Inverting conditions

The tilde operator does not always mean expanding the arguments though. Sometimes it’s used for actually inverting the functionality of some clis:

range(5) | filt(lambda x: x % 2 == 0) | aS(list) # returns [0, 2, 4]

range(5) | ~filt(lambda x: x % 2 == 0) | aS(list) # returns [1, 3]

[3, 5.5, "text"] | ~instanceOf(int) | aS(list) # returns [5.5, "text"]

Capturing operators

Some clis have the ability to “capture” the behavior of other clis and modify them on the fly. For example, let’s see tryout(), which catches errors in the pipeline and returns a default value if an error is raised:

"a3" | (tryout(4) | aS(int)) | op()*2 # returns 8, because int("a3") will throws an error, which will be caught, and the pipeline reduces down to 4*2

"3" | (tryout(4) | aS(int)) | op()*2 # returns 6, because int("3") will not throw an error, and the pipeline effectively reduces down to int("3")*2

"3" | tryout(4) | aS(int) | op()*2 # throws an error, because tryout() doesn't capture anything

Just a side note, op() will record all operations done on it, and it will replay those operations on anything that’s piped into it.

In the first line, tryout() | aS(int) will be executed first, which will lead to tryout()

capturing all of the clis behind it and injecting in a try-catch code block to wrap all of them

together. In the third line, it doesn’t work because "3" | tryout(4) is executed first,

but here, tryout() doesn’t have the chance to capture the clis behind it, so it can’t inject

a try-catch block around them. This also means that in the 1st and 2nd line, the final multify-by-2

step is not caught, because tryout() is bounded by the parentheses. If you’re composing this

inside of another cli, then the scope is bounded by the outside cli:

range(5) | apply( tryout(-1) | op()**2) | deref() # returns [0, 1, 4, 9, 16]. tryout() will capture op()**2

range(5) | apply((tryout(-1) | op()**2)) | deref() # returns [0, 1, 4, 9, 16], exactly the same as before, demonstrating that you don't have to wrap tryout() around another pair of braces

Cli composition

One of the very powerful things about this workflow is that you can easily combine cli tools together, to reach unfathomable levels of complexity while using very little code and still remain relatively readable. For example, this is an image dataloader built pretty much from scratch, but with full functionality comparable to PyTorch’s dataloaders:

base = "~/ssd/data/imagenet/set1/192px"

idxToCat = base | ls() | head(80) | op().split("/")[-1].all() | insertIdColumn() | toDict()

catToIdx = idxToCat.items() | permute(1, 0) | toDict()

# stage 1, (train/valid, classes, samples (url of img))

st1 = base | ls() | head(80) | apply(ls() | splitW()) | transpose() | deref() | aS(k1.Wrapper)

# stage 2, (train/valid, classes, samples, [img, class])

st2 = st1() | (apply(lambda x: [x | toImg() | toTensor(torch.uint8), catToIdx[x.split("/")[-2]]]) | repeatFrom(4) | apply(aS(tf.Resize(192)) | aS(tf.AutoAugment()) | op()/255, 0)).all(2) | deref() | aS(k1.Wrapper)

def dataF(bs): return st2() | apply(repeatFrom().all() | joinStreamsRandom() | batched(bs) | apply(transpose() | aS(torch.stack) + toTensor(torch.long))) | stagger.tv(10000/bs) | aS(list)

These 6 lines of code will read from a directory, grabs all images from the first 80 categories, splits them into train and valid sets. Then it will extend the data infinitely (so that we never run out of batches to train), load the images on multiple worker processes, do augmentations on them, renormalize them, batch them up, stack them together into a tensor, and split batches into multiple epochs.

All of that, from scratch, where you’re in control of every detail, operating in 7 dimensions, in multiple processes, in just 6 lines of code. This is just so ridiculously powerful that it boggles my mind every day. Yes, you can argue that it’s not clear what’s going on, but for a person that is already familiar with them like I do, seeing exactly how data is being transformed at every stage is quite straightforward and trivial.

Serial composition

So let’s see a few examples on how to compose clis together. Let’s say you have a list of files:

fileNames = ["a.txt", "b.txt", "c.txt"]

Let’s say you now want to read every line from every file quickly, using cli tools, and get the number of lines in each file. Instead of something like this:

sizes = []

for fileName in fileNames:

sizes.append(cat(fileName) | shape(0)) # shape(0) is kinda like aS(len). It just returns the length of the input iterator, but difference is that aS(len) can only operate on lists

…which really defeats the purpose of the elegant cli workflow, you can do:

sizes = fileNames | apply(cat() | shape(0)) | aS(list)

In this example, there is 1 “composition”: cat() | shape(0). If you check out the

docs for cat, which is used to read files, you’d know that there’re 2 modes of operation:

cat("a.txt") | shape(0) # mode 1: cat() acts like a function, returning a list of lines in the file

"a.txt" | cat() | shape(0) # mode 2: cat() acts like a cli tool, which will return a list of lines in the file when a file name is piped into it

"a.txt" | (cat() | shape(0)) # mode 2: cat() acts like a cli tool, "cat() | shape(0)" acts as a "serial" cli

s = cat() | shape(0); "a.txt" | s # equivalent to the 3rd line, but this time declaring "cat() | shape(0)" as a separate object

In the second case, "a.txt" | cat() will be executed first, then getting the number of elements will be

executed later (... | shape(0)), but in the third case, cat() | shape(0) will be executed first, which

returns the special cli serial, then the file name will be piped in later ("a.txt" | (...))

Because cli tools are also functions, which includes serial, you can pass them into other cli

tools that expects a function, like apply. You can be extra meta, like this:

# assume a.txt, b.txt, c.txt has 10, 20, 30 lines

fileNames = [["a.txt"], ["b.txt", "c.txt"]]

# returns [[10], [20, 30]]

sizes = fileNames | apply(apply(cat() | shape(0)))

# also returns [[10], [20, 30]], and is equivalent to the line above, as "apply(apply(...))" is equivalent to "(...).all(2)"

sizes = fileNames | (cat() | shape(0)).all(2)

This type of composition is quite straightforward, unlike the next 2.

“&” composition, or “oneToMany”

Take a look at this example:

arr = ["a", "b", "c"]

arr | toRange() # returns range(3), equivalent to [0, 1, 2]

arr | iden() # returns ["a", "b", "c"]

arr | (toRange() & iden()) | aS(list) # returns [range(3), ["a", "b", "c"]]

arr | toRange() & iden() | aS(list) # returns [range(3), ["a", "b", "c"]], demonstrating "&" will be executed before "|", so you don't need parentheses around it

arr | toRange() & iden() | joinStreams() | aS(list) # returns [0, 1, 2, "a", "b", "c"]

So, this will take the input iterator, duplicates into 2 versions, pipes them into the 2 clis you specified and return both of them. You can do this with as much clis as you want:

arr | toRange() & shape() & grep("a") | deref() # returns [[0, 1, 2], [3, 1], ["a"]]

arr | toRange() & (shape() & grep("a")) | deref() # also returns [[0, 1, 2], [3, 1], ["a"]], demonstrating a strange edge case that parentheses won't stop all clis adjacent to each other joined by "&" from combining together

Hopefully it now makes sense why it’s called “oneToMany”, as we’re making 1 iterator available

for many clis. Also, if the exact cli operation is only known at run time, then you can

procedurally do this using oneToMany.

“+” composition, or “mtmS”

Take a look at this example:

even = filt(lambda x: x % 2 == 0)

odd = filt(lambda x: x % 2 == 1) # can also just be "~even", but I'm writing it out this way to be clear

[range(10, 20), range(30, 40)] | (even + odd) | deref() # returns [[10, 12, 14, 16, 18], [31, 33, 35, 37, 39]]

[range(10, 20) | even, range(30, 40) | odd] | deref() # also returns [[10, 12, 14, 16, 18], [31, 33, 35, 37, 39]], demonstrating that these are equivalent to each other

So, let’s say that there’re n items inside of the input iterator and that you specified n

clis. Then, each item will be piped into the corresponding cli, hence the name mtmS, or

“manyToManySpecific”. Why not just “mtm”? Well, there used to be a “manyToMany” operator,

but it’s been removed and I’m lazy to change it back.

Vanilla alternatives

These operations are not actually strictly necessary, they’re just convenience functions so that writing code is simpler and more straightforward. They can be implemented using normal clis like so:

a = iden()

b = apply(lambda x: x**2)

c = shape()

x = [[1, 2], [3, 4], [5, 6]]

x | a + b + c | deref() # returns [[1, 2], [9, 16], [2]]

x | ~aS(lambda x, y, z: [x | a, y | b, z | c]) | deref() # returns [[1, 2], [9, 16], [2]]

x = range(5)

x | a & b & c | deref() # returns [[0, 1, 2, 3, 4], [0, 1, 4, 9, 16], [5]]

x | aS(lambda x: [x | a, x | b, x | c]) | deref() # returns [[0, 1, 2, 3, 4], [0, 1, 4, 9, 16], [5]]

So you might want to use these vanilla versions initially if you’re having a hard time with this, but I wouldn’t recommend using vanilla in the long term.

JS transpiler

Philosophy

Just a short note: while I was developing this, the emphasis is on creating very succinct code that does a whole lot, to aid in exploring/creating datasets. Because of it, I’ve chosen to sacrifice readability. The idea is, if it’s so fast to create a functionality, whenever you need to change the code, it’ll be faster to just recreate it from scratch than try to change the existing code. And the mental effort to recreate it is substantially lower than the mental effort needed to understand a normal codebase written using vanilla Python. Also this encourages you to rethink the problem in a new light entirely, which usually results in much shorter and simpler code than if you were to adapt an existing solution. This seem to be true for me so far.

Note that creating unreadable, fantastically complicated code only happens around 5%. Majority

of the time it’s actually very readable and I can change an obscure detail after 10 seconds.

The way I usually do it is to “feel” what the data looks like, instead of trying to trace what

it looks like from the very beginning. This is possible because certain functions has certain

common signatures. For example, ~apply(lambda x,y: x+y, 3) probably means that it’s manipulating

a table with the 3rd column being a list of 2 coordinate numbers. So, overtime, you’ll develop

an intuition for what’s happenning and can visualize the data’s shape right in the middle of the

pipeline.

Where to start?

Core clis include:

These clis are pretty important, and are used all the time, so look over them to see what the library can do. Whenever you find some cli you have not encountered before, you can just search it in the search bar on the top left of the page.

Then other important, not necessarily core clis include:

So, start reading over what these do first, as you can pretty much 95% utilize everything

the cli workflow has to offer with those alone. Then skim over basic conversions in

module conv. While you’re doing that, checkout trace(),

for a quite powerful debugging tool.

There are several written tutorials about cli here, and I also made some video tutorials as well, so go check those out.

For every example in the tutorials that you found, you might find it useful to follow the following debugging steps, to see how everything works:

# assume there's this piece of code:

A | B | C | D

# do this instead:

A | deref()

# once you understand it, do this:

A | B | deref()

# assume there's this piece of code:

A | B.all() | C

# do this instead:

A | item() | B | deref()

# once you understand it, you can move on:

A | B.all() | deref()

# assume there's this piece of code:

A | B & C

# do this instead:

A | B | deref()

# assume there's this piece of code:

A | (B + C)

# do these instead:

A | deref() | op()[0] | B | deref()

A | deref() | op()[1] | C | dereF()

# there are alternatives to that:

A | item() | B | deref()

A | rows(1) | item() | C | deref()

Finally, you can read over the summary below, see what catches your eye and check that cli out.

Summary

conv |

structural |

utils |

filt |

modifier |

|---|---|---|---|---|

typehint |

inp |

output |

init |

kxml |

|---|---|---|---|---|

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

nb |

grep |

trace |

optimizations |

|---|---|---|---|

Under the hood

How it works underneath is pretty simple. All cli tools implement the “reverse or” operation, or __ror__. So essentially, these 2 statements are equivalent:

3 | obj

obj.__ror__(3)

There are several other operations that certain clis can override, like “>” or “>>”. Also, if you’re an advanced user, there’s also an optimizer that looks like LLVM, so you can implement optimization passes to speed up everything by a lot:

Creating your own cli

It’s fairly simple to create your new cli. If it’s composed of other clis, you can do something like this:

newCli = filt(lambda x: x%2==0) | head(4) | deref()

range(10) | newCli # returns [0, 2, 4, 6]

If it’s more complicated that needs to have access to some state, like a sum of numbers,

then you can extend from BaseCli like so:

class NewCli(BaseCli):

def __init__(self, bias=0):

self.bias = bias # don't necessarily have to call super.__init__()

def __ror__(self, it):

s = self.bias

for elem in it:

s += elem

return s

[range(12, 30), range(8)] | NewCli(4).all() | deref() # returns [373, 32]

Accelerations

Cli tools are pretty dynamic and clever. A lot of times, they try to understand what you’re trying to do, then rewrite your code into something else completely, but still produce exactly the desired output. For example:

["what is 3+4?", "what is 8+7?"] | apply(complete()) # returns ['0', '7']. I know, LLMs are still bad at math

np.random.randn(3, 4, 5) | apply(repeat(2).all() | transpose() | joinStreams()) # returns numpy array with shape (3, 8, 5)

On the first line, normally, complete() takes in 1 single string and also outputs a single string.

But if you know how these LLMs are run, you know that it’s a lot more efficient for the GPU to batch

multiple sentences together to generate text at the same time. So on the surface, this line seems

horribly inefficient, as it will call the model 2 times, one for each string. However, cli tools are

smart enough to realize you’re trying feed multiple things to a model, and will batch them up automatically.

On the second line, there’re lots of operations that should normally heavily bisect the input data

(a numpy array), like apply() and repeat() and whatnot, but believe it or not, cli tools are

smart enough to transform the array completely in C, and the output of the whole thing is still another

numpy array and not a nested generator. Here’s the summary of the operations that are accelerated in this

way:

========== `array | cli` and `array | cli.all(int)` capability

conv.idxsToNdArray conv.toArgmax conv.toArgmin

conv.toAvg conv.toCm conv.toFloat

conv.toImg conv.toInt conv.toMax

conv.toMedian conv.toMin conv.toNdArray

conv.toProd conv.toStd conv.toSum

conv.toTensor conv.toUnix filt.contains

filt.cut filt.filt filt.filtStd

filt.inSet filt.mask filt.rows

filt.unique models.tsne modifier.apply

modifier.clamp modifier.randomize modifier.roll

modifier.sort output.plotImgs structural.batched

structural.groupBy structural.hist structural.insert

structural.insertIdColumn structural.joinStreams structural.joinStreamsRandom

structural.peek structural.peekF structural.repeat

structural.repeatFrom structural.splitC structural.splitW

structural.transpose structural.window utils.deref

utils.ignore utils.item utils.normalize

utils.rItem utils.reverse utils.size

utils.wrapList utils.zeroes

========== `array | cli.all(int)` capability alone

bio.complement bio.idx bio.longAa

bio.medAa bio.transcribe bio.translate

conv.toAnchor conv.toAngle conv.toAudio

conv.toBytes conv.toCsv conv.toDataUri

conv.toDict conv.toDist conv.toExcel

conv.toFileType conv.toGray conv.toHtml

conv.toIso conv.toLinks conv.toList

conv.toMovingAvg conv.toPdf conv.toQr

conv.toRange conv.toRgb conv.toRgba

conv.toRoman conv.toYMD conv.toYaml

filt.breakIf filt.empty filt.head

filt.intersection filt.tail filt.trigger

filt.tryout filt.union init.BaseCli

init.mtmS init.oneToMany init.serial

inp.catPickle inp.cmd inp.kunzip

inp.kzip inp.refineSeek inp.splitSeek

inp.walk models.bloom models.complete

models.embed models.kmeans modifier.applyCl

modifier.applyMp modifier.applyS modifier.applySerial

modifier.applyTh modifier.consume modifier.integrate

modifier.iterDelay modifier.op modifier.sortF

modifier.stagger output.file output.intercept

output.pretty output.stdout output.tee

output.unpretty structural.AA_ structural.activeSamples

structural.batchedTrigger structural.count structural.insertColumn

structural.latch structural.oneHot structural.permute

structural.reshape structural.ungroup typehint.tCheck

typehint.tOpt utils.backup utils.bindec

utils.branch utils.clipboard utils.getitems

utils.iden utils.join utils.lookup

utils.lookupRange utils.rateLimit utils.resize

utils.sketch utils.smooth utils.syncStepper

utils.timeLimit

========== `array | cli` capability alone

========== No array acceleration

bio module

This is for functions that are actually biology-related

- k1lib.cli.bio.quality(log=True)[source]

Get numeric quality of sequence. Example:

# returns [2, 2, 5, 30] "##&?" | quality() | deref()

- Parameters:

log – whether to use log scale (0 -> 40), or linear scale (1 -> 0.0001)

- k1lib.cli.bio.longFa()[source]

Takes in a fasta file and put each sequence on 1 line. File “gene.fa”:

>AF086833.2 Ebola virus - Mayinga, Zaire, 1976, complete genome CGGACACACAAAAAGAAAGAAGAATTTTTAGGATC TTTTGTGTGCGAATAACTATGAGGAAGATTAATAA >something other gene CGGACACACAAAAAGAAAGAAGA TTTTGTGTGCGAATAACTATGAG

Code:

cat("gene.fa") | bio.longFa() | cli.headOut()

Prints out:

>AF086833.2 Ebola virus - Mayinga, Zaire, 1976, complete genome CGGACACACAAAAAGAAAGAAGAATTTTTAGGATCTTTTGTGTGCGAATAACTATGAGGAAGATTAATAA >something other gene CGGACACACAAAAAGAAAGAAGATTTTGTGTGCGAATAACTATGAG

- class k1lib.cli.bio.idx(fs: list = [], capture=False)[source]

Bases:

BaseCliIndexes files with various formats.

- static blast(fileName: str = None, dbtype: str = None)[source]

Uses

makeblastdbto create a blast database from a fasta file. Example:"file.fa" | bio.idx.blast() bio.idx.blast("file.fa")

- class k1lib.cli.bio.transcribe(fs: list = [], capture=False)[source]

Bases:

BaseCliTranscribes (DNA -> RNA) incoming rows. Example:

# returns "AUCG" "ATCG" | transcribe() # returns ["AUCG"] ["ATCG"] | transcribe() | deref()

- class k1lib.cli.bio.complement(fs: list = [], capture=False)[source]

Bases:

BaseCliGet the reverse complement of DNA. Example:

# returns "TAGC" "ATCG" | bio.complement() # returns ["TAGC"] ["ATCG"] | bio.complement() | deref()

- class k1lib.cli.bio.translate(length: int = 0)[source]

Bases:

BaseCli

cif module

All tools related to cif file format that describes protein structures. Expected to use behind the “cif” module name, like this:

from k1lib.imports import *

cif.cat("abc.cif")

- k1lib.cli.cif.tables(name=None, dikt=True)[source]

Loads table info. Dictionary mode:

# both return output below "1z7z.cif" | cif.tables() | op()["_audit_author"] "1z7z.cif" | cif.tables("_audit_author")

Potential output:

{'name': ("'Xiao, C.'", "'Bator-Kelly, C.M.'", "'Rieder, E.'", "'Chipman, P.R.'", "'Craig, A.'", "'Kuhn, R.J.'", "'Wimmer, E.'", "'Rossmann, M.G.'"), 'pdbx_ordinal': ('1', '2', '3', '4', '5', '6', '7', '8')}

Result is a dictionary of

table name -> dict(). That inner dictionary maps from column name to a list of elements. All columns should have the same number of elements.Table mode:

# both return output below "1z7z.cif" | cif.tables("_audit_author", dikt=False) "1z7z.cif" | cif.tables(dikt=False) | op()["_audit_author"]

Potential output:

[['name', 'pdbx_ordinal'], ["'Xiao, C.'", '1'], ["'Bator-Kelly, C.M.'", '2'], ["'Rieder, E.'", '3'], ["'Chipman, P.R.'", '4'], ["'Craig, A.'", '5'], ["'Kuhn, R.J.'", '6'], ["'Wimmer, E.'", '7'], ["'Rossmann, M.G.'", '8']]

Result is a dictionary of

table name -> List[List[str]]. So basically you’re getting the table directly.- Parameters:

name – if specified, only grabs the specified table, else returns every table

dikt – whether to return a dict or table for each table

conv module

This is for all short utilities that converts from 1 data type to another. They

might feel they have different styles, as toFloat converts object iterator to

float iterator, while toImg converts single image url to single PIL image,

whereas toSum converts float iterator into a single float value.

The general convention is, if the intended operation sounds simple (convert to floats, strings, types, …), then most likely it will convert iterator to iterator, as you can always use the function directly if you only want to apply it on 1 object.

If it sounds complicated (convert to PIL image, tensor, …) then most likely it will convert object to object. Lastly, there are some that just feels right to input an iterator and output a single object (like getting max, min, std, mean values).

- class k1lib.cli.conv.toNdArray(dtype=None)[source]

Bases:

BaseCli- blurb = 'Converts several data types to numpy.ndarray'

- __init__(dtype=None)[source]

Converts generator/several data types to

numpy.ndarray. Essentiallynp.array(list(it)). Can convert PIL Image. Example:# returns array([0., 1., 2.]) range(3) | toNdArray() # returns array([0., 1., 2.], dtype=float32) range(3) | toNdArray(np.float32)

- class k1lib.cli.conv.toTensor(dtype=None)[source]

Bases:

BaseCli- blurb = 'Converts several data types to torch.Tensor'

- __init__(dtype=None)[source]

Converts generator to

torch.Tensor. Essentiallytorch.tensor(list(it)). Default dtype is float32. Can convert PIL Image. Example:# returns tensor([0., 1., 2.], dtype=torch.float64) range(3) | toTensor(torch.float64)

- class k1lib.cli.conv.toRange[source]

Bases:

BaseCli- blurb = 'Returns iter(range(len(it))), but incrementally'

- class k1lib.cli.conv.toList[source]

Bases:

BaseCli- __init__()[source]

Converts generator to list. Example:

# returns [0, 1, 2, 3, 4] range(5) | toList() # returns [0, 1, 2, 3, 4] range(5) | aS(list)

So this cli is sort of outdated. It still works fine, nothing wrong with it, but just do

aS(list)instead. It’s not removed to avoid breaking old projects.

- class k1lib.cli.conv.toSum[source]

Bases:

BaseCli- blurb = 'Calculates the sum of a list of numbers'

- __init__()[source]

Calculates the sum of list of numbers. Can pipe in

torch.Tensorornumpy.ndarray. Example:range(10) | toSum() # returns 45 np.random.randn(2, 3, 4) | toSum().all() | shape() # returns (2,)

- class k1lib.cli.conv.toProd[source]

Bases:

BaseCli- blurb = 'Calculates the product of a list of numbers'

- __init__()[source]

Calculates the product of a list of numbers. Can pipe in

torch.Tensorornumpy.ndarray. Example:range(1,10) | toProd() # returns 362880 np.random.randn(2, 3, 4) | toProd().all() | shape() # returns (2,)

- class k1lib.cli.conv.toAvg[source]

Bases:

BaseCli- blurb = 'Calculates the average of a list of numbers'

- __init__()[source]

Calculates average of list of numbers. Can pipe in

torch.Tensorornumpy.ndarray. Example:range(10) | toAvg() # returns 4.5 [] | toAvg() # returns nan np.random.randn(2, 3, 4) | toAvg().all() | shape() # returns (2,)

- class k1lib.cli.conv.toStd(ddof: int = 0)[source]

Bases:

BaseCli- blurb = 'Calculates the standard deviation of a list of numbers'

- __init__(ddof: int = 0)[source]

Calculates standard deviation of list of numbers. Can pipe in

torch.Tensorornumpy.ndarrayto be faster. Example:range(10) | toStd() # returns 2.8722813232690143 [] | toStd() # returns nan np.random.randn(2, 3, 4) | toStd().all() | shape() # returns (2,)

- Parameters:

ddof – “delta degree of freedom”. The divisor used in calculations is

N - ddof

- class k1lib.cli.conv.toMedian(percentile=50)[source]

Bases:

BaseCli- blurb = 'Calculates the median of a list of numbers'

- class k1lib.cli.conv.toMax(default=None)[source]

Bases:

BaseCli- blurb = 'Calculates the max value of a list of numbers'

- __init__(default=None)[source]

Calculates the max of a bunch of numbers. Can pipe in

torch.Tensorornumpy.ndarray. Example:[2, 5, 6, 1, 2] | toMax() # returns 6 np.random.randn(2, 3, 4) | toMax().all() | shape() # returns (2,)

- class k1lib.cli.conv.toMin[source]

Bases:

BaseCli- blurb = 'Calculates the min value of a list of numbers'

- __init__()[source]

Calculates the min of a bunch of numbers. Can pipe in

torch.Tensorornumpy.ndarray. Example:[2, 5, 6, 1, 2] | toMin() # returns 1 np.random.randn(2, 3, 4) | toMin().all() | shape() # returns (2,)

- class k1lib.cli.conv.toArgmin[source]

Bases:

BaseCli- blurb = "Grabs the min value's index"

- class k1lib.cli.conv.toArgmax[source]

Bases:

BaseCli- blurb = "Grabs the max value's index"

- class k1lib.cli.conv.toImg(closeFig=True, crop=True)[source]

Bases:

BaseCli- blurb = 'Converts multiple data types into a PIL image'

- __init__(closeFig=True, crop=True)[source]

Converts multiple data types into a PIL image. Example:

ls(".") | toImg().all() | item() # grabs first image in the current folder torch.randn(100, 200) | toImg() # converts from tensor/array to image "abc.jpg" | toImg() | toBytes() | toImg() # grabs image, converts to byte stream, and converts back to image ["abc", "def"] | toImg() # converts paragraphs to image "c1ccc(C)cc1" | toMol() | toImg() # converts SMILES string to molecule, then to image ["ab", "bc", "ca"] | (kgv.sketch() | kgv.edges()) | toHtml() | toImg() # sketches a graphviz plot, converts to svg then renders the svg as an image df | toHtml() | toImg() # converts pandas data frame to html, then render it to image "/dev/video0" | toImg() # reads an image from the 1st camera connected to the computer 0 | toImg() # same as above

You can also save a matplotlib figure by piping in a

matplotlib.figure.Figureobject:x = np.linspace(0, 4) plt.plot(x, x**2) plt.gcf() | toImg()

Note

If you are working with image tensors, which is typically have dimensions of (C, H, W), you have to permute it to PIL’s (H, W, C) first before passing it into this cli.

Also it’s expected that your tensor image ranges from 0-255, and not 0-1. Make sure you renormalize it

- Parameters:

closeFig – if input is a matplotlib figure, then closes the figure after generating the image

crop – whether to crop white spaces around an image or not

- class k1lib.cli.conv.toRgb[source]

Bases:

BaseCli- blurb = 'Converts grayscale/rgb PIL image to rgb image'

- class k1lib.cli.conv.toRgba[source]

Bases:

BaseCli- blurb = 'Converts random PIL image to rgba image'

- class k1lib.cli.conv.toGray[source]

Bases:

BaseCli- blurb = 'Converts random PIL image to a grayscale image'

- class k1lib.cli.conv.toDict(rows=True, defaultF=None)[source]

Bases:

BaseCli- blurb = 'Converts 2 Iterators, 1 key, 1 value into a dictionary'

- __init__(rows=True, defaultF=None)[source]

Converts 2 Iterators, 1 key, 1 value into a dictionary. Example:

# returns {1: 3, 2: 4} [[1, 3], [2, 4]] | toDict() # returns {1: 3, 2: 4} [[1, 2], [3, 4]] | toDict(False)

If

rowsis a string, then it will build a dictionary from key-value pairs delimited by this character. For example:['gene_id "ENSG00000290825.1"', 'transcript_id "ENST00000456328.2"', 'gene_type "lncRNA"', 'gene_name "DDX11L2"', 'transcript_type "lncRNA"', 'transcript_name "DDX11L2-202"', 'level 2', 'transcript_support_level "1"', 'tag "basic"', 'tag "Ensembl_canonical"', 'havana_transcript "OTTHUMT00000362751.1"'] | toDict(" ")

That returns:

{'gene_id': '"ENSG00000290825.1"', 'transcript_id': '"ENST00000456328.2"', 'gene_type': '"lncRNA"', 'gene_name': '"DDX11L2"', 'transcript_type': '"lncRNA"', 'transcript_name': '"DDX11L2-202"', 'level': '2', 'transcript_support_level': '"1"', 'tag': '"Ensembl_canonical"', 'havana_transcript': '"OTTHUMT00000362751.1"'}

- Parameters:

rows – if True, reads input in row by row, else reads in list of columns

defaultF – if specified, return a defaultdict that uses this function as its generator

- class k1lib.cli.conv.toFloat(*columns, mode=2)[source]

Bases:

BaseCli- blurb = 'Converts an iterator into a list of floats'

- __init__(*columns, mode=2)[source]

Converts every row into a float. Example:

# returns [1, 3, -2.3] ["1", "3", "-2.3"] | toFloat() | deref() # returns [[1.0, 'a'], [2.3, 'b'], [8.0, 'c']] [["1", "a"], ["2.3", "b"], [8, "c"]] | toFloat(0) | deref()

With weird rows:

# returns [[1.0, 'a'], [8.0, 'c']] [["1", "a"], ["c", "b"], [8, "c"]] | toFloat(0) | deref() # returns [[1.0, 'a'], [0.0, 'b'], [8.0, 'c']] [["1", "a"], ["c", "b"], [8, "c"]] | toFloat(0, force=True) | deref()

This also works well with

torch.Tensorandnumpy.ndarray, as they will not be broken up into an iterator:# returns a numpy array, instead of an iterator np.array(range(10)) | toFloat()

- Parameters:

columns – if nothing, then will convert each row. If available, then convert all the specified columns

mode – different conversion styles - 0: simple

float()function, fastest, but will throw errors if it can’t be parsed - 1: if there are errors, then replace it with zero - 2: if there are errors, then eliminate the row

- class k1lib.cli.conv.toInt(*columns, mode=2)[source]

Bases:

BaseCli- blurb = 'Converts an iterator into a list of ints'

- __init__(*columns, mode=2)[source]

Converts every row into an integer. Example:

# returns [1, 3, -2] ["1", "3", "-2.3"] | toInt() | deref()

- Parameters:

columns – if nothing, then will convert each row. If available, then convert all the specified columns

mode – different conversion styles - 0: simple

float()function, fastest, but will throw errors if it can’t be parsed - 1: if there are errors, then replace it with zero - 2: if there are errors, then eliminate the row

See also:

toFloat()

- class k1lib.cli.conv.toRoman[source]

Bases:

BaseCli

- class k1lib.cli.conv.toBytes(dataType=None)[source]

Bases:

BaseCli- blurb = 'Converts several object types to bytes'

- __init__(dataType=None)[source]

Converts several object types to bytes. Example:

# converts string to bytes "abc" | toBytes() # converts image to bytes in jpg format torch.randn(200, 100) | toImg() | toBytes() # converts image to bytes in png format torch.randn(200, 100) | toImg() | toBytes("PNG") "some_file.mp3" | toAudio() | toBytes("mp3")

If it doesn’t know how to convert to bytes, it will just pickle it

Custom datatype

It is possible to build objects that can interoperate with this cli, like this:

class custom1: def __init__(self, config=None): ... def _toBytes(self): return b"abc" class custom2: def __init__(self, config=None): ... def _toBytes(self, dataType): if dataType == "png": return b"123" else: return b"456" custom1() | toBytes() # returns b"abc" custom2() | toBytes() # returns b"456" custom2() | toBytes("png") # returns b"123"

When called upon,

toByteswill detect that the input has the_toBytesmethod, which will prompt it to execute that method of the complex object. Of course, this means that you can return anything, not necessarily bytes, but to maintain intuitiveness, you should return either bytes or iterator of bytes- Parameters:

dataType – depending on input. If it’s an image then this can be png, jpg. If it’s a sound then this can be mp3, wav or things like that

- class k1lib.cli.conv.toDataUri[source]

Bases:

BaseCli- blurb = 'Converts several object types into data uri scheme'

- __init__()[source]

Converts incoming object into data uri scheme. Data uris are the things that look like “data:image/png;base64, …”, or “data:text/html;base64, …”. This is a convenience tool mainly for other tools, and not quite useful directly. Example:

randomImg = cat("https://mlexps.com/ergun.png", False) | toImg() # returns PIL image randomImg | toDataUri() # returns k1lib.cli.conv.DataUri object with .mime field "image/png" and .uri field "data:image/png;base64, ..." randomImg | toDataUri() | toHtml() # returns hmtl string `<img src="data:image/png;base64, ..."/>` randomImg | toHtml() # same like above. toHtml() actually calls toDataUri() behind the scenes randomImg | toDataUri() | toAnchor() # creates anchor tag (aka link elements "<a></a>") that, when clicked, displays the image in a new tab randomImg | toAnchor() # same as above. toAnchor() actually calls toDataUri() behind the scenes

- class k1lib.cli.conv.toAnchor(text: str = 'click here')[source]

Bases:

BaseCli- blurb = 'Converts several object types into a html anchor tag'

- __init__(text: str = 'click here')[source]

Converts incoming object into a html anchor tag that, when clicked, displays the incoming object’s html in another tab. Example:

randomImg = cat("https://mlexps.com/ergun.png", False) | toImg() # returns PIL image randomImg | toAnchor() # returns html string `<a href="data:image/png;base64, ..."></a>`

On some browsers, there’s sort of a weird bug where a new tab would open, but there’s nothing displayed on that tab. If you see this is happening, just press F5 or Ctrl+R to refresh the page and it should display everything nicely

- Parameters:

text – text to display inside of the anchor

- class k1lib.cli.conv.toHtml[source]

Bases:

BaseCli- blurb = 'Converts several object types to html'

- __init__()[source]

Converts several object types to html. Example:

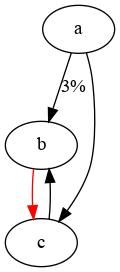

# converts PIL image to html <img> tag torch.randn(200, 100) | toImg() | toHtml() # converts graphviz graph to svg text (which is essentially html) g = k1.digraph(); g(*"abc"); g(*"bcd"); g | toHtml() # converts plotly graphs to html import plotly.express as px; import pandas as pd df = pd.DataFrame({'x': [1, 2, 3, 4, 5], 'y': [10, 11, 12, 14, 15]}) fig = px.line(df, x='x', y='y', title='Simple Line Chart') fig | toHtml() # converts matplotlib plot to image, and then to html. Do this if you want a static plot x = np.linspace(-2, 2); y = x**2 plt.plot(x, x**2); plt.gcf() | toImg() | toHtml() # converts matplotlib plot to D3.js html sketch plt.plot(x, x**2); plt.gcf() | toHtml()

- k1lib.cli.conv.toAscii()[source]

Converts complex unicode text to its base ascii form. Example:

"hà nội" | toAscii() # returns "ha noi"

Taken from https://stackoverflow.com/questions/2365411/convert-unicode-to-ascii-without-errors-in-python

- k1lib.cli.conv.toHash() str[source]

Converts some string into some hash string. Example:

"abc" | toHash() # returns 'gASVJAAAAAAAAABDILp4Fr+PAc/qQUFA3l2uIiOwA2Gjlhd6nLQQ/2HyABWtlC4='

Why not just use the builtin function

hash("abc")? Because it generates different hashes for different interpreter sessions, and that breaks many of my applications that need the hash value to stay constant forever.

- class k1lib.cli.conv.toCsv(allSheets=False)[source]

Bases:

BaseCli- blurb = 'Converts several object types into a table/dataframe'

- __init__(allSheets=False)[source]

Converts a csv file name into a table. Example:

"abc.csv" | toCsv() # returns table of values (Iterator[List[str]]) "abc.csv" | toCsv() # returns pd.DataFrame, if configure 'settings.toCsv.df = True' "def.xlsx" | toCsv() # returns table of values in the first sheet "def.xlsx" | toCsv(True) # returns List[Sheet name (str), table of values] ["a,b,c,d", "1,2,3,4"] | toCsv() | deref() # returns [['a', 'b', 'c', 'd'], ['1', '2', '3', '4']]

Warning

Note that this is pretty slow compared to just splitting by semicolons. If your dataset doesn’t have anything complicated like semicolons in quotes, then just do

op().split(",").all()If your dataset does have complicated quotes, then I’d suggest reading the csv using this cli, then convert it to a tsv file (tab-separated value). Then you can always just split the string using tab characters

- Parameters:

allSheets – if input is an Excel sheet, whether to read in all sheets or just the first sheet. No effect if input is a normal csv file

- class k1lib.cli.conv.toYaml(mode=None, safe=True)[source]

Bases:

BaseCli- blurb = 'Converts file name/yaml string to object and object to yaml string'

- __init__(mode=None, safe=True)[source]

Converts file name/yaml string to object and object to yaml string. Example:

"some_file.yaml" | toYaml() # returns python object cat("some_file.yaml") | join("\n") | toYaml(1) # returns python object {"some": "object", "arr": [1, 2]} | toYaml() # returns yaml string. Detected object coming in, instead of string, so will convert object into yaml string

- Parameters:

mode – None (default) for figure it out automatically, 0 for loading from file name, 1 for loading from raw yaml string, 2 for converting object to yaml string

safe – if True, always use safe_load() instead of load()

- class k1lib.cli.conv.toAudio(rate=None)[source]

Bases:

BaseCli- blurb = 'Reads audio from either a file or a URL or from bytes'

- __init__(rate=None)[source]

Reads audio from either a file or a URL or from bytes directly. Example:

au = "some_file.wav" | toAudio() # can display in a notebook, which will preview the first 10 second au | toBytes() # exports audio as .wav file au | toBytes("mp3") # exports audio as .mp3 file au.resample(16000) # resamples audio to new rate au | head(0.1) # returns new Audio that has the first 10% of the audio only au | splitW(8, 2) # splits Audio into 2 Audios, first one covering 80% and second one covering 20% of the track au.raw # internal pydub.AudioSegment object. If displayed in a notebook, will play the whole thing

You can also use this on any Youtube video or random mp3 links online and on raw bytes:

"https://www.youtube.com/watch?v=FtutLA63Cp8" | toAudio() # grab Bad Apple song from internet cat("some_file.wav", False) | toAudio() # grab from raw bytes of mp3 or wav, etc.

- class k1lib.cli.conv.toUnix(tz: str | tzfile = None, mode: int = 0)[source]

Bases:

BaseCli- blurb = 'Converts to unix timestamp'

- __init__(tz: str | tzfile = None, mode: int = 0)[source]

Tries anything piped in into a unix timestamp. If can’t convert then return None or the current timestamp (depending on mode). Example:

Local time zone independent:

"2023" | toUnix() # returns 2023, or 2023 seconds after unix epoch. Might be undesirable, but has to support raw ints/floats "2023-11-01T00Z" | toUnix() # midnight Nov 1st 2023 GMT "2023-11-01T00:00:00-04:00" | toUnix() # midnight Nov 1st 2023 EST "2023-11-01" | toUnix("US/Pacific") # midnight Nov 1st 2023 PST "2023-11-01" | toUnix("UTC") # midnight Nov 1st 2023 UTC

Local time zone dependent (assumes EST):

"2023-11" | toUnix() # if today's Nov 2nd EST, then this would be 1698897600, or midnight Nov 2nd 2023 EST "2023-11-04" | toUnix() # midnight Nov 4th 2023 EST

Feel free to experiment more, but in general, this is pretty versatile in what it can convert. With more effort, I’d probably make this so that every example given will not depend on local time, but since I just use this to calculate time differences, I don’t really care.

- Parameters:

tz – Timezone, like “US/Eastern”, “US/Pacific”. If not specified, then assumes local timezone. Get all available timezones by executing

toUnix.tzs()mode – if 0, then returns None if can’t convert, to catch errors quickly. If 1, then returns current timestamp instead

- class k1lib.cli.conv.toIso(tz: str | tzfile = None)[source]

Bases:

BaseCli- blurb = 'Converts unix timestamp to a human readable time string'

- __init__(tz: str | tzfile = None)[source]

Converts unix timestamp into ISO 8601 string format. Example:

1701382420 | toIso() # returns '2023-11-30T17:13:40', which is correct in EST time 1701382420 | toIso() | toUnix() # returns 1701382420, the input timestamp, showing it's correct 1701382420.123456789 | toIso() # still returns '2023-11-30T17:13:40'

As you might have noticed, this cli depends on the timezone of the host computer. If you want to get it in a different timezone, do this:

1701382420 | toIso("UTC") # returns '2023-11-30T22:13:40' 1701382420 | toIso("US/Pacific") # returns '2023-11-30T14:13:40'

- Parameters:

tz – Timezone, like “US/Eastern”, “US/Pacific”. If not specified, then assumes local timezone. Get all available timezones by executing

toUnix.tzs()

- class k1lib.cli.conv.toYMD(idx=None, mode=<class 'int'>)[source]

Bases:

BaseCli- blurb = 'Converts unix timestamp into tuple (year, month, day, hour, minute, second)'

- __init__(idx=None, mode=<class 'int'>)[source]

Converts unix timestamp into tuple (year, month, day, hour, minute, second). Example:

1701382420 | toYMD() # returns [2023, 11, 30, 17, 13, 40] in EST timezone 1701382420 | toYMD(0) # returns 2023 1701382420 | toYMD(1) # returns 11 1701382395 | toYMD(mode=str) # returns ['2023', '11', '30', '17', '13', '15']

- Parameters:

idx – if specified, take the desired element only. If 0, then take year, 1, then month, etc.

mode – either int or str. If str, then returns nicely adjusted numbers

- class k1lib.cli.conv.toLinks(f=None)[source]

Bases:

BaseCli- blurb = 'Extracts links and urls from a paragraph'

- __init__(f=None)[source]

Extracts links and urls from a paragraph. Example:

paragraph = [ "http://a.c", "http://a2.c some other text in between <a href='http://b.d'>some link</a> fdvb" ] # returns {'http://a.c', 'http://a2.c', 'http://b.d'} paragraph | toLinks() | deref()

If the input is a string instead of an iterator of strings, then it will

cat()it first, then look for links inside the result. For example:"https://en.wikipedia.org/wiki/Cheese" | toLinks()

At the time of writing, that returns a lot of links:

{'/wiki/Rind-washed_cheese', '#cite_ref-online_5-7', 'https://web.archive.org/web/20160609031000/http://www.theguardian.com/lifeandstyle/wordofmouth/2012/jun/27/how-eat-cheese-and-biscuits', 'https://is.wikipedia.org/wiki/Ostur', '/wiki/Meat_and_milk', '/wiki/Wayback_Machine', '/wiki/File:WikiCheese_-_Saint-Julien_aux_noix_01.jpg', 'https://gv.wikipedia.org/wiki/Caashey', '/wiki/Eyes_(cheese)', '/wiki/Template_talk:Condiments', '#Pasteurization', '/wiki/Tuscan_dialect', '#cite_note-23', '#cite_note-aha2017-48',

So, keep in mind that lots of different things can be considered a link. That includes absolute links (’https://gv.wikipedia.org/wiki/Caashey’), relative links within that particular site (‘/wiki/Tuscan_dialect’), and relative links within the page (‘#Pasteurization’).

How it works underneath is that it’s looking for a string like “https://…” and a string like “href=’…’”, which usually have a link inside. For the first detection style, you can specify extra protocols that you want to search for using

settings.cli.toLinks.protocols = [...].Also, this will detect links nested within each other multiple times. For example, the link ‘https://web.archive.org/web/20160609031000/http://www.theguardian.com/lifeandstyle/wordofmouth/2012/jun/27/how-eat-cheese-and-biscuits’ will appear twice in the result, once as itself, but also ‘https://www.theguardian.com/lifeandstyle/wordofmouth/2012/jun/27/how-eat-cheese-and-biscuits’

Note that if you really try, you will be able to find an example where this won’t work, so don’t expect 100% reliability. But for ost use cases, this should perform splendidly.

- class k1lib.cli.conv.toMovingAvg(col: int = None, alpha=0.9, debias=True, v: float = 0, dt: float = 1)[source]

Bases:

BaseCli- blurb = 'Smoothes out sequential data using some momentum and debias values'

- __init__(col: int = None, alpha=0.9, debias=True, v: float = 0, dt: float = 1)[source]

Smoothes out sequential data using momentum. Example:

# returns [4.8, 4.62, 4.458]. 4.8 because 0.9*5 + 0.1*3 = 4.8, and so on [3, 3, 3] | toMovingAvg(v=5, debias=False) | deref()

Sometimes you want to ignore the initial value, then you can turn on debias mode:

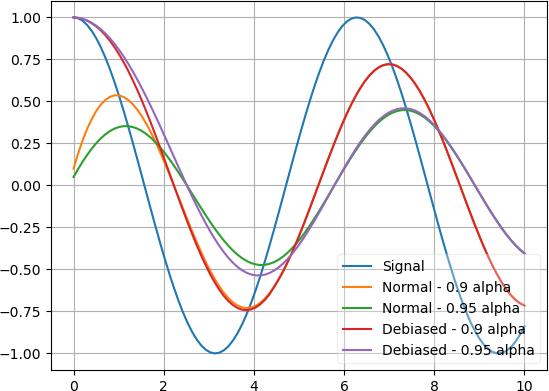

x = np.linspace(0, 10, 100); y = np.cos(x) plt.plot(x, y) plt.plot(x, y | toMovingAvg(debias=False) | deref()) plt.plot(x, y | toMovingAvg(debias=False, alpha=0.95) | deref()) plt.plot(x, y | toMovingAvg(debias=True) | deref()) plt.plot(x, y | toMovingAvg(debias=True, alpha=0.95) | deref()) plt.legend(["Signal", "Normal - 0.9 alpha", "Normal - 0.95 alpha", "Debiased - 0.9 alpha", "Debiased - 0.95 alpha"], framealpha=0.3) plt.grid(True)

As you can see, normal mode still has the influence of the initial value at 0 and can’t rise up fast, whereas the debias mode will ignore the initial value and immediately snaps to the first value.

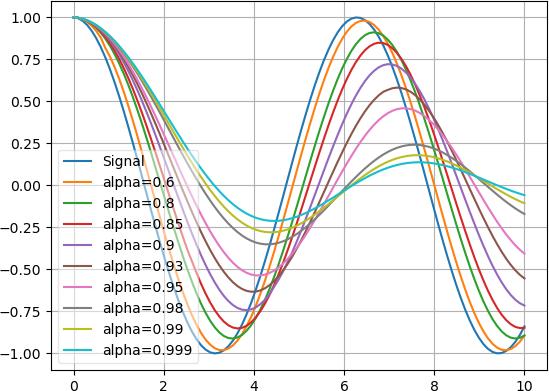

Also, the 2 graphs with 0.9 alpha snap together quicker than the 2 graphs with 0.95 alpha. Here’s the effect of several alpha values:

- Parameters:

col – column to apply moving average to

alpha – momentum term

debias – whether to turn on debias mode or not

v – initial value, doesn’t matter in debias mode

dt – pretty much never used, hard to describe, belongs to debias mode, checkout source code for details

- class k1lib.cli.conv.toCm(col: int, cmap=None, title: str = None, log: bool = False)[source]

Bases:

BaseCli- blurb = 'Converts the specified column to a bunch of color values, and adds a matplotlib colorbar automatically'

- __init__(col: int, cmap=None, title: str = None, log: bool = False)[source]

Converts the specified column to a bunch of color values, and adds a matplotlib colorbar automatically. “cm” = “color map”. Example:

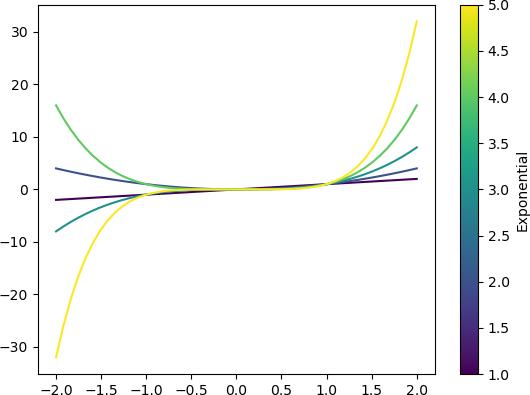

import matplotlib.cm as cm exps = [1, 2, 3, 4, 5] x = np.linspace(-2, 2) data = exps | apply(lambda exp: [exp, x, x**exp]) | deref() # without toCm(), plots fine, demonstrates underlying mechanism, but doesn't allow plotting a separate colorbar data | normalize(0, mode=1) | apply(cm.viridis, 0) | ~apply(lambda c,x,y: plt.plot(x, y, color=c)) | ignore() # with toCm(), draws a colorbar automatically data | toCm(0, cm.viridis, "Exponential") | ~apply(lambda c,x,y: plt.plot(x, y, color=c)) | ignore()

Functionality is kind of niche, but I need this over and over again, so have to make it

- Parameters:

col – column to convert float/int to color (tuple of 4 floats)

cmap – colormap to use. If not specified, defaults to

cm.viridistitle – title of the colorbar, optional

- class k1lib.cli.conv.toPdf[source]

Bases:

BaseCli- blurb = 'Reads a pdf file to a managed object and can do lots of downstream tasks from there'

- __init__()[source]

Reads a pdf file. Can do lots of downstream tasks. Example:

pdf = "someFile.pdf" | toPdf() len(pdf) # get number of pages pdf[2] | cat() # get text content of 2nd (0-indexed) page pdf[2] | toImg() # converts 2nd page to an image pdf[2].blocks() # grabs a list of text blocks, ordered top to bottom, like [[[x1, y1, x2, y2], "some text"], [...], ...]

- class k1lib.cli.conv.toDist(norm=2)[source]

Bases:

BaseCli- blurb = 'Calculates the euclidean distance of the input points'

- __init__(norm=2)[source]

Calculates the euclidean distance of the input points. Example:

a = np.random.randn(3) b = np.random.randn(3) [a, b] | toDist() # returns distance between those 2 points

Essentially just ((a-b)**2).sum()**0.5. But I kept needing this over and over again so gotta make it into a separate cli.

- class k1lib.cli.conv.toAngle(radians=True)[source]

Bases:

BaseCli- blurb = 'Calculates the angle between 2 vectors'

- class k1lib.cli.conv.idxsToNdArray(ds: tuple[int] = None, n: int = None)[source]

Bases:

BaseCli- blurb = 'Converts indices (aka point cloud) to numpy array'

- __init__(ds: tuple[int] = None, n: int = None)[source]

Converts indices (aka point cloud) to numpy array. Example:

[[1,2], [2,3]] | idxsToNdArray() # returns np.array([[0, 0, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]) [[1,2], [2,3]] | idxsToNdArray(n=2) # returns np.array([[0, 0, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]) [[1,2], [2,3]] | idxsToNdArray(ds=[3,4]) # returns np.array([[0, 0, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]])

So, the standard use case is that you have a point cloud (points [1,2] and [2,3]) and you want to get the dense array with those points filled in. Then you can do it with this function. Notice how in all 3 examples, the points are marked with a 1. You can specify either the dense array’s shape using parameter “.ds”, or just the number of dimensions with parameter “.n”. If you specify neither then it will auto figure that out, but the final shape might not be what you wanted.

Let’s see some other use cases:

[[1,2,3], [2,3,4]] | idxsToNdArray() | shape() # returns (3, 4, 5) [[1,2,3], [2,3,4]] | idxsToNdArray(n=2) # returns np.array([[0, 0, 0, 0], [0, 0, 3, 0], [0, 0, 0, 4]]) [[1,2,3], [2,3,4]] | idxsToNdArray(n=1) # returns np.array([[0, 0], [2, 3], [3, 4]]) [[1,2,3,4], [2,3,4,5]] | idxsToNdArray(n=2) # returns np.array([[[0, 0], [0, 0], [0, 0], [0, 0]], [[0, 0], [0, 0], [3, 4], [0, 0]], [[0, 0], [0, 0], [0, 0], [4, 5]]])

In the first example, if you don’t specify the dimensions, it will return a 3d array, and the selected points will have the value 1. But if you insist that it should have 2 dimensions only, and the remaining columns should be the selected points’ values, then you can either limit .n, or specify the shape .ds but only has length of 2. Notice how the second example got filled in by values 3 and 4 and not 1.

- Parameters:

ds – dimensions

n – number of dimensions

- class k1lib.cli.conv.toFileType[source]

Bases:

BaseCli- blurb = 'Grab file type of a file or file contents (bytes)'

- __init__()[source]

Grab file type of a file or file contents. Example:

# returns "PNG image data, 1024 x 1365, 8-bit/color RGBA, non-interlaced" "some_image.png" | toFileType() # returns "JPEG image data, JFIF standard 1.01, aspect ratio, density 1x1, segment length 16, baseline, precision 8, 1024x1365, components 3" "some_image.png" | toImg() | toBytes() | toFileType()

This does take quite a while to execute, up to 42ms/file, so if you’re doing it a lot, would suggest you use

applyMpor something like that. Internally, this will call the command line programfileand returns its results, so this is just a convenience cli.

- class k1lib.cli.conv.toQr[source]

Bases:

BaseCli

- class k1lib.cli.conv.toExcel[source]

Bases:

BaseCli- __init__()[source]

2 modes:

Reads an excel file and returns an

ExcelFileobject that can do many things.

This mode is activated when the input is a string, which it is interpreted as a file name. Example:

workbook = "somefile.xlsx" | toExcel() # reads the file worksheet = workbook | ls() # lists out all sheets within the workbook worksheet | cat() # grabs all cells' values, returns List[List[Any]]

2) Converts a python table to excel sheet in bytes. Example:

# returns bytes of the excel file, merging A1:B1, with all correct column widths [["A", None, "B"], [1, 2, 3]] | toExcel() # saves to the specified file [["A", None, "B"], [1, 2, 3]] | toExcel() | file("somefile.xlsx")

- class k1lib.cli.conv.toMdTable[source]

Bases:

object- __init__()[source]

Converts incoming table to a nice markdown table. Example:

["ABC", [1,2,3], "456", "789"] | toMdTable()

That returns:

['| A | B | C |', '| -- | -- | -- |', '| 1 | 2 | 3 |', '| 4 | 5 | 6 |', '| 7 | 8 | 9 |']

Honestly this is just a convenience function, as you can typically just do

table | display()and that’d be enough in a jupyter environment. But I was trying to use obsidian and want to generate a table that obsidian can understand

mgi module

All tools related to the MGI database. Expected to use behind the “mgi” module name, like this:

from k1lib.imports import *

["SOD1", "AMPK"] | mgi.batch()

filt module

This is for functions that cuts out specific parts of the table

- class k1lib.cli.filt.filt(predicate: Callable[[Any], bool], column: int | List[int] = None, catchErrors: bool = False)[source]

Bases:

BaseCli- __init__(predicate: Callable[[Any], bool], column: int | List[int] = None, catchErrors: bool = False)[source]

Filters out elements. Examples:

# returns [2, 6], grabbing all the even elements [2, 3, 5, 6] | filt(lambda x: x%2 == 0) | deref() # returns [3, 5], grabbing all the odd elements [2, 3, 5, 6] | ~filt(lambda x: x%2 == 0) | deref() # returns [[2, 'a'], [6, 'c']], grabbing all the even elements in the 1st column [[2, "a"], [3, "b"], [5, "a"], [6, "c"]] | filt(lambda x: x%2 == 0, 0) | deref() # throws error, because strings can't mod divide [1, 2, "b", 8] | filt(lambda x: x % 2 == 0) | deref() # returns [2, 8] [1, 2, "b", 8] | filt(lambda x: x % 2 == 0, catchErrors=True) | deref()

You can also pass in

opor string, for extra intuitiveness and quickness:# returns [2, 6] [2, 3, 5, 6] | filt(op() % 2 == 0) | deref() # returns ['abc', 'a12'] ["abc", "def", "a12"] | filt(op().startswith("a")) | deref() # returns [3, 4, 5, 6, 7, 8, 9] range(100) | filt(3 <= op() < 10) | deref() # returns [3, 4, 5, 6, 7, 8, 9] range(100) | filt("3 <= x < 10") | deref()

See

aSfor more details on string mode. If you pass innumpy.ndarrayortorch.Tensor, then it will automatically use the C-accelerated versions if possible, like this:# returns np.array([2, 3, 4]), instead of iter([2, 3, 4]) np.array([1, 2, 3, 4]) | filt(lambda x: x>=2) | deref() # returns [2, 3, 4], instead of np.array([2, 3, 4]), because `math.exp` can't operate on numpy arrays np.array([1, 2, 3, 4]) | filt(lambda x: math.exp(x) >= 3) | deref()

If you need more extensive filtering capabilities involving text, check out

grepIf “filt” is too hard to remember, this cli also has an alias

filter_that kinda mimics Python’sfilter().- Parameters:

predicate – function that returns True or False

column – if not specified, then filters elements of the input array, else filters the specific column only (or columns, just like in

apply)catchErrors – whether to catch errors in the function or not (reject elements that raise errors). Runs slower if enabled though

- split()[source]

Splits the input into positive and negative samples. Example:

# returns [[0, 2, 4, 6, 8], [1, 3, 5, 7, 9]] range(10) | filt(lambda x: x%2 == 0).split() | deref() # also returns [[0, 2, 4, 6, 8], [1, 3, 5, 7, 9]], exactly like above range(10) | filt(lambda x: x%2 == 0) & filt(lambda x: x%2 != 0) | deref()

- class k1lib.cli.filt.inSet(values: Set[Any], column: int = None, inverse=False)[source]

Bases:

filt

- class k1lib.cli.filt.contains(s: str, column: int = None, inverse=False)[source]

Bases:

filt

- class k1lib.cli.filt.empty(reverse=False)[source]

Bases:

BaseCli- __init__(reverse=False)[source]

Filters out streams that is not empty. Almost always used inverted, but “empty” is a short, sweet name that’s easy to remember. Example:

# returns [[1, 2], ['a']] [[], [1, 2], [], ["a"]] | ~empty() | deref()

- Parameters:

reverse – not intended to be used by the end user. Do

~empty()instead.

- k1lib.cli.filt.isNumeric(column: int = None) filt[source]

Filters out a line if that column is not a number. Example:

# returns [0, 2, '3'] [0, 2, "3", "a"] | isNumeric() | deref()

- k1lib.cli.filt.instanceOf(cls: type | Tuple[type], column: int = None) filt[source]

Filters out lines that is not an instance of the given type. Example:

# returns [2] [2, 2.3, "a"] | instanceOf(int) | deref() # returns [2, 2.3] [2, 2.3, "a"] | instanceOf((int, float)) | deref()

- class k1lib.cli.filt.head(n=10)[source]

Bases:

BaseCli- __init__(n=10)[source]

Only outputs first

nelements. You can also negate it (like~head(5)), which then only outputs after firstnlines. Examples:"abcde" | head(2) | deref() # returns ["a", "b"] "abcde" | ~head(2) | deref() # returns ["c", "d", "e"] "0123456" | head(-3) | deref() # returns ['0', '1', '2', '3'] "0123456" | ~head(-3) | deref() # returns ['4', '5', '6'] "012" | head(None) | deref() # returns ['0', '1', '2'] "012" | ~head(None) | deref() # returns []

You can also pass in fractional head:

range(20) | head(0.25) | deref() # returns [0, 1, 2, 3, 4], or the first 25% of samples

Also works well and fast with

numpy.ndarray,torch.Tensorand other sliceable types:# returns (10,) np.linspace(1, 3) | head(10) | shape()

- class k1lib.cli.filt.tail(n: int = 10)[source]

Bases:

BaseCli

- class k1lib.cli.filt.cut(*columns: List[int])[source]

Bases:

BaseCli- __init__(*columns: List[int])[source]

Cuts out specific columns, sliceable. Examples:

["0123456789", "abcdefghij"] | cut(5, 8) | deref() # returns [['5', '8'], ['f', 'i']] ["0123456789", "abcdefghij"] | cut(8, 5) | deref() # returns [['8', '5'], ['i', 'f']], demonstrating permutation-safe ["0123456789"] | cut(5, 8) | deref() # returns [['5', '8']] ["0123456789"] | cut(8, 5) | deref() # returns [['8', '5']], demonstrating permutation-safe ["0123456789", "abcdefghij"] | cut(2) | deref() # returns ['2', 'c'], instead of [['2'], ['c']] as usual ["0123456789"] | cut(2) | deref() # returns ['2'] ["0123456789"] | cut(5, 8) | deref() # returns [['5', '8']] ["0123456789"] | ~cut()[:7:2] | deref() # returns [['1', '3', '5', '7', '8', '9']]

In the first example, you can imagine that we’re operating on this table:

0123456789 abcdefghij

Then, we want to grab the 5th and 8th column (0-indexed), which forms this table:

58 fi

So, result of that is just

[['5', '8'], ['f', 'i']]In the fourth example, if you’re only cutting out 1 column, then it will just grab that column directly, instead of putting it in a list.

If you pass in

numpy.ndarrayortorch.Tensor, then it will automatically use the C-accelerated versions, like this:torch.randn(4, 5, 6) | cut(2, 3) # returns tensor of shape (4, 2, 6) torch.randn(4, 5, 6) | cut(2) # returns tensor of shape (4, 6) torch.randn(4, 5, 6) | ~cut()[2:] # returns tensor of shape (4, 2, 6)

Warning

TD;DR: inverted negative indexes are a bad thing when rows don’t have the same number of elements

Everything works fine when all of your rows have the same number of elements. But things might behave a little strangely if they don’t. For example:

# returns [['2', '3', '4'], ['2', '3', '4', '5', '6', '7']]. Different number of columns, works just fine ["0123456", "0123456789"] | cut()[2:-2] | deref() # returns [['0', '1', '8', '9'], ['a', 'b', 'i', 'j']]. Same number of columns, works just fine ["0123456789", "abcdefghij"] | ~cut()[2:-2] | deref() # returns [['0', '1', '5', '6'], ['0', '1', '5', '6', '7', '8', '9']]. Different number of columns, unsupported invert case ["0123456", "0123456789"] | ~cut()[2:-2] | deref()

Why does this happen? It peeks at the first row, determines that ~[2:-2] is equivalent to [:2] and [5:] combined and not [:2] and [-2:] combined. When applied to the second row, [-2:] goes from 5->9, hence the result. Another edge case would be:

# returns [['0', '1', '2', '3', '5', '6'], ['0', '1', '2', '3', '5', '6', '7', '8', '9']] ["0123456", "0123456789"] | ~cut(-3) | deref()

Like before, it peeks the first row and translate ~(-3) into ~4, which is equivalent to [:4] and [5:]. But when applied to the second row, it now carries the meaning ~4, instead of ~(-3).

Why don’t I just fix these edge cases? Because the run time for it would be completely unacceptable, as we’d have to figure out what’s the columns to include in the result for every row. This could easily be O(n^3). Of course, with more time optimizing, this could be solved, but this is the only extreme edge case and I don’t feel like putting in the effort to optimize it.

- class k1lib.cli.filt.rows(*rows: List[int])[source]

Bases:

BaseCli- __init__(*rows: List[int])[source]

Selects specific elements given an iterator of indexes. Space complexity O(1) as a list is not constructed (unless you’re slicing it in really weird way). Example:

"0123456789" | rows(2) | toList() # returns ["2"] "0123456789" | rows(5, 8) | toList() # returns ["5", "8"] "0123456789" | rows()[2:5] | toList() # returns ["2", "3", "4"] "0123456789" | ~rows()[2:5] | toList() # returns ["0", "1", "5", "6", "7", "8", "9"] "0123456789" | ~rows()[:7:2] | toList() # returns ['1', '3', '5', '7', '8', '9'] "0123456789" | rows()[:-4] | toList() # returns ['0', '1', '2', '3', '4', '5'] "0123456789" | ~rows()[:-4] | toList() # returns ['6', '7', '8', '9']

Why it’s called “rows” is because I couldn’t find a good name for it. There was

cut, which the name of an actual bash cli that selects out columns given indicies. When I needed a way to do what this cli does, it was in the context of selecting out rows, so the name stuck.If you want to just pick out the nth item from the iterator, instead of doing this:

iter(range(10)) | rows(3) | item() # returns 3

… you can use the shorthand

rIteminstead:iter(range(10)) | rItem(3) # returns 3

- Parameters:

rows – ints for the row indices

- class k1lib.cli.filt.intersection(column=None, full=False)[source]

Bases:

BaseCli- __init__(column=None, full=False)[source]

Returns the intersection of multiple streams. Example:

# returns set([2, 4, 5]) [[1, 2, 3, 4, 5], [7, 2, 4, 6, 5]] | intersection() # returns ['2g', '4h', '5j'] [["1a", "2b", "3c", "4d", "5e"], ["7f", "2g", "4h", "6i", "5j"]] | intersection(0) | deref()

If you want the full distribution, meaning the intersection, as well as what’s left of each stream, you can do something like this:

# returns [{2, 4, 5}, [1, 3], [7, 6]] [[1, 2, 3, 4, 5], [7, 2, 4, 6, 5]] | intersection(full=True) | deref()

- Parameters:

column – what column to apply the intersection on. Defaulted to None

full – if specified, return the full distribution, instead of the intersection alone

- class k1lib.cli.filt.union[source]

Bases:

BaseCli

- class k1lib.cli.filt.unique(column: int = None)[source]

Bases:

BaseCli- __init__(column: int = None)[source]

Filters out non-unique row elements. Example:

# returns [[1, "a"], [2, "a"]] [[1, "a"], [2, "a"], [1, "b"]] | unique(0) | deref() # returns [0, 1, 2, 3, 4] [*range(5), *range(3)] | unique() | deref()

In the first example, because the 3rd element’s first column is 1, which has already appeared, so it will be filtered out.

- Parameters:

column – the column to detect unique elements. Can be None, which will behave like converting the input iterator into a set, but this cli will maintain the order

- class k1lib.cli.filt.breakIf(f, col: int = None)[source]

Bases:

BaseCli- __init__(f, col: int = None)[source]

Breaks the input iterator if a condition is met. Example:

# returns [0, 1, 2, 3, 4, 5] [*range(10), 2, 3] | breakIf(lambda x: x > 5) | deref() # returns [[1, 'a'], [2, 'b']] [[1, "a"], [2, "b"], [3, "c"], [2, "d"], [1, "e"]] | breakIf("x > 2", 0) | deref()

- Parameters:

col – column to apply the condition on

- class k1lib.cli.filt.mask(mask: Iterator[bool])[source]

Bases:

BaseCli- __init__(mask: Iterator[bool])[source]

Masks the input stream. Example:

# returns [0, 1, 3] range(5) | mask([True, True, False, True, False]) | deref() # returns [2, 4] range(5) | ~mask([True, True, False, True, False]) | deref() # returns torch.tensor([0, 1, 3]) torch.tensor(range(5)) | mask([True, True, False, True, False])

- class k1lib.cli.filt.tryout(result=None, retries=0, mode='result')[source]

Bases:

BaseCli- __init__(result=None, retries=0, mode='result')[source]

Wraps every cli operation after this in a try-catch block, returning

resultif the operation fails. Example:# returns 9 3 | (tryout("failed") | op()**2) # returns "failed", instead of raising an exception "3" | (tryout("failed") | op()**2) # special mode: returns "unsupported operand type(s) for ** or pow(): 'str' and 'int'" "3" | (tryout(mode="str") | op()**2) # special mode: returns entire trace stack (do `import traceback` first) "3" | (tryout(mode="traceback") | op()**2) # special mode: returns "3", the input of the tryout() block "3" | (tryout(mode="input") | op()**2)

By default, this

tryout()object will gobble up all clis behind it and wrap them inside a try-catch block. This might be undesirable, so you can stop it early:# returns "failed" 3 | (tryout("failed") | op()**2 | aS(str) | op()**2) # raises an exception, because it errors out after the tryout()-captured operations 3 | (tryout("failed") | op()**2) | aS(str) | op()**2

In the first example,

tryoutwill catch any errors happening withinop(),aS(str)or the secondop()**2. In the second example,tryoutwill only catch errors happening within the firstop()**2.Array mode

The above works well for atomic operations and not looping operations. Let’s say we have this function:

counter = 0 def f(x): global counter if x > 5: counter += 1 if counter < 3: raise Exception(f"random error: {x}") return x**2

This code will throw an error if x is greater than 5 for the first and second time (but runs smoothly after that. It’s a really nasty function I know). Capturing like this will work:

counter = 0 # line below returns [0, 1, 4, 9, 16, 25, 'failed', 'failed', 64, 81] range(10) | apply(tryout("failed") | aS(f)) | deref()

But capturing like this won’t work:

counter = 0 # line below throws an exception range(10) | (tryout("failed") | apply(f)) | deref()

The reason being,

tryoutwill only capture errors when the data is passed intoapply(f), and won’t capture it later on. However, when data is passed toapply(f), it hasn’t executed anything yet (remember these things are lazily executed). So the exception actually happens when you’re trying toderef()it, which lies outside oftryout’s reach. You can just put a tilde in front to tell it to capture errors for individual elements in the iterator:counter = 0 # line belows returns [0, 1, 4, 9, 16, 25, 'failed', 'failed', 64, 81] range(10) | (~tryout("failed") | apply(f)) | deref()

This mode has a weird quirk that requires that there has to be a 1-to-1 correspondence between the input and output for the block of code that it wraps around. Meaning this is okay:

def g(x): global counter if 40 > x[0] >= 30: counter += 1 if counter < 5: raise Exception("random error") return x counter = 0 # returns 50, corrects errors as if it's not even there! range(50) | (~tryout(None, 6) | batched(10, True) | apply(g) | joinStreams()) | deref() | shape(0)

This is okay because going in, there’re 50 elements, and it’s expected that 50 elements goes out of

tryout. The input can be of infinite length, but there has to be a 1-to-1 relationship between the input and output. While this is not okay:counter = 0 # returns 75, data structure corrupted range(50) | (~tryout(None, 6) | batched(10, True) | apply(g) | joinStreams() | batched(2, True)) | joinStreams() | deref() | shape(0)

It’s not okay because it’s expected that 25 pairs of elements goes out of

tryout- Parameters:

result – result to return if there is an exception

mode – if “result” (default), returns the result if there’s an exception. If “str” then returns the exception’s string. If “input” then returns the original input. If “traceback” then returns the exception’s traceback

retries – how many time to retry before giving up?

- k1lib.cli.filt.resume(fn)[source]

Resumes a long-running operation. I usually have code that looks like this:

def f(x): pass # long running, expensive calculation ls(".") | applyMp(f) | apply(dill.dumps) | file("somefile.pth") # executing cat.pickle("somefile.pth") | aS(list) # getting all of the saved objects

This will read all the files in the current directory, transforms them using the long-running, expensive function, potentially doing it in multiple processes. Then the results are serialized (turns into bytes) and it will be appended to an output file.

What’s frustrating is that I do stupid things all the time, so the process usually gets interrupted. But I don’t want to redo the existing work, so that’s where this cli comes into play. Now it looks like this instead:

ls(".") | resume("somefile.pth") | applyMp(f) | apply(dill.dumps) >> file("somefile.pth")